This is the last post of a series that presents a proposal for a cheap yet (I think) robust solution for centralizing the logs of Documentum platforms, which can be of great help to anyone who would like to implement a non-intrusive yet very powerful real-time monitoring system.

Part 2 of this series presented the steps required to instrument the Webtop and JMS components. This post gives the steps required to instrument the following components: Content Server, Docbroker, xPlore and CTS (Content Transformation Services).

Note that I have not instrumented Documentum D2 or Documentum xCP yet, but I hope to find some time to do so in the future.

Generally speaking, the steps required to forward all logs from the components listed above to our logging server (logfaces) are simple:

- for “legacy” components which do not have any advanced logging capabilities, forward file-based logs using a syslog-compatible agent

- for components with such capabilities, modify their logging configuration to add a logfaces appender and use it to forward logs

Content Server log forwarding:

As mentioned in Part 1, the logfaces server is compatible with syslog, so any syslog-compliant agent can forward logs to it. There are many articles describing how to configure syslog clients on Unix/Linux systems, so I will not describe this here (you may have a look at the following article).

It is actually harder to find a reliable syslog forwarding agent on Windows. I evaluated several of them and found logstash to be the most reliable and least intrusive one. Logstash has only one requirement: Java. As always, running logstash as a Windows service is a good idea; most of the time I use YAJSW (Yet Another Java Service Wrapper) to do so.

Here is an example logstash configuration file that forwards Content Server and Docbroker logs to our logfaces log server:

input {
  file {
    path => ["D:/documentum/dba/log/CS*.log"]
  }
  file {
    path => ["D:/documentum/dba/log/*.Docbroker.log"]
  }
}

output {
  syslog {
    appname => "DEV-Docbase"
    facility => "kernel"
    host => "<logserver_name>"
    port => 514
    severity => "informational"
  }
}

In this example, several content servers run on the same machine, each with its own docbroker, and we forward all content server and docbroker logs to our logfaces server with the “informational” severity.
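To make the facility and severity settings more concrete: syslog packs both into a single PRI number (facility * 8 + severity), where “kernel” is facility 0 and “informational” is severity 6, so each forwarded line starts with <6>. The Java sketch below builds such a line in the classic RFC 3164 layout and sends it as a UDP datagram, which, as far as I know, is roughly what the logstash syslog output does with its default settings. The class name, host name and message are placeholders, and the exact layout produced by the logstash plugin may differ slightly (for example, it may add a process id after the app name).

```java
import java.net.DatagramPacket;
import java.net.DatagramSocket;
import java.net.InetAddress;
import java.nio.charset.StandardCharsets;
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;
import java.util.Locale;

final class SyslogSketch {
    // Numeric codes from the syslog RFCs: facility "kernel" = 0, severity "informational" = 6
    static final int FACILITY_KERNEL = 0;
    static final int SEVERITY_INFORMATIONAL = 6;

    // RFC 3164 timestamp, e.g. "Oct  5 14:03:07" (day of month padded with a space)
    private static final DateTimeFormatter STAMP =
            DateTimeFormatter.ofPattern("MMM ppd HH:mm:ss", Locale.ENGLISH);

    // PRI value written between angle brackets at the start of each message
    static int priority(int facility, int severity) {
        return facility * 8 + severity;
    }

    // Builds "<PRI>TIMESTAMP HOSTNAME APPNAME: MESSAGE"
    static String format(int facility, int severity, LocalDateTime time,
                         String host, String appName, String message) {
        return "<" + priority(facility, severity) + ">" + time.format(STAMP)
                + " " + host + " " + appName + ": " + message;
    }

    // Sends one message as a single UDP datagram (classic syslog transport)
    static void send(String logServer, int port, String line) throws Exception {
        byte[] payload = line.getBytes(StandardCharsets.UTF_8);
        try (DatagramSocket socket = new DatagramSocket()) {
            socket.send(new DatagramPacket(payload, payload.length,
                    InetAddress.getByName(logServer), port));
        }
    }

    public static void main(String[] args) throws Exception {
        String line = format(FACILITY_KERNEL, SEVERITY_INFORMATIONAL, LocalDateTime.now(),
                "dctm-host", "DEV-Docbase", "sample Content Server log line");
        System.out.println(line);
        if (args.length > 0) {
            send(args[0], 514, line); // pass the log server name as first argument
        }
    }
}
```

Running it without arguments only prints the formatted line, which is a convenient way to check what a given facility/severity pair turns into before touching the logstash configuration.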

And here is how those logs will appear in the logfaces client interface:

Note that logfaces also provides an alternative way to ingest log files: the so-called drop zones, which consist in regularly copying log files into locations made available to the logfaces server. Those files are then parsed and converted into logging events.
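Feeding a drop zone can be as simple as a scheduled copy. The Java sketch below, assuming a hypothetical drop zone share and the same CS*.log naming as in the logstash example, copies matching files into the drop zone and replaces any previous copy. I have not checked how logfaces treats a file that is copied again after it has grown, so whether to copy only rotated (closed) log files rather than live ones is something to verify in the logfaces documentation before relying on this.

```java
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.StandardCopyOption;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

final class DropZoneCopier {
    // Copies every regular file of sourceDir matching the glob (e.g. "CS*.log") into
    // dropZone, replacing any previous copy; returns the copied file names, sorted
    static List<String> copyLogs(Path sourceDir, String glob, Path dropZone) throws IOException {
        Files.createDirectories(dropZone);
        List<String> copied = new ArrayList<>();
        try (DirectoryStream<Path> logs = Files.newDirectoryStream(sourceDir, glob)) {
            for (Path log : logs) {
                if (!Files.isRegularFile(log)) {
                    continue;
                }
                String name = log.getFileName().toString();
                Files.copy(log, dropZone.resolve(name), StandardCopyOption.REPLACE_EXISTING);
                copied.add(name);
            }
        }
        Collections.sort(copied);
        return copied;
    }

    public static void main(String[] args) throws IOException {
        // Hypothetical locations: the Content Server log folder and a drop zone share
        Path source = Paths.get("D:/documentum/dba/log");
        Path dropZone = Paths.get("\\\\logserver\\dropzone\\DEV-Docbase");
        System.out.println("Copied: " + copyLogs(source, "CS*.log", dropZone));
    }
}
```

The copy itself would typically be triggered by the Windows Task Scheduler or cron at whatever interval matches the latency you can accept.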

xPlore (full text) component instrumentation

In order to instrument xPlore, we will actually instrument the following xPlore subcomponents:

- the Index Agent, which handles dmi_queue_item requests and extracts content and metadata

- the CPS (Content Processing Service), which performs the content processing

- the xDB component, which stores the result of the indexing

- the DSearch component, which handles search requests

The Index Agent has its own JBoss server (DctmServer_Indexagent). The CPS, xDB and DSearch components share the same JBoss instance (DctmServer_PrimaryDsearch).

In order to instrument the Index Agent:

- copy the lfsappenders-X.Y.Z.jar file (which contains the logfaces appenders) to the DctmServer_Indexagent\deploy\IndexAgent.war\WEB-INF\lib folder

- modify the log4j.properties configuration file located in the DctmServer_Indexagent\deploy\IndexAgent.war\WEB-INF\classes folder as follows:

log4j.rootCategory=WARN, F1, LFS
log4j.category.MUTE=OFF
log4j.category.com.documentum=WARN
log4j.category.indexagent.AgentInfo=INFO
log4j.category.com.documentum.server.impl=INFO
log4j.category.no.fast.connectortoolkit=INFO
log4j.category.com.emc.documentum.core.fulltext=INFO, F1, LFS
log4j.additivity.com.emc.documentum.core.fulltext=false

log4j.appender.A1=org.apache.log4j.ConsoleAppender
log4j.appender.A1.layout=org.apache.log4j.PatternLayout
log4j.appender.A1.layout.ConversionPattern=%d{ISO8601} %p %c{1} [%t]%m%n

log4j.appender.F1=org.apache.log4j.RollingFileAppender
log4j.appender.F1.File=D\:/xPlore/jboss5.1.0/server/DctmServer_Indexagent/logs/Indexagent.log
log4j.appender.F1.MaxFileSize=10MB
log4j.appender.F1.MaxBackupIndex=5
log4j.appender.F1.layout=org.apache.log4j.PatternLayout
log4j.appender.F1.layout.ConversionPattern=%d{ISO8601} %p %c{1} [%t]%m%n

log4j.appender.LFS=com.moonlit.logfaces.appenders.AsyncSocketAppender
log4j.appender.LFS.application=TST-xPlore-IndexAgent
log4j.appender.LFS.remoteHost=logfacesserver
log4j.appender.LFS.port=55200
log4j.appender.LFS.locationInfo=true
log4j.appender.LFS.threshold=ALL
log4j.appender.LFS.reconnectionDelay=5000
log4j.appender.LFS.offerTimeout=0
log4j.appender.LFS.queueSize=100
log4j.appender.LFS.backupFile=D\:/Temp/lfs-indexagent-backup.log
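The rootCategory and additivity lines decide which loggers actually reach the LFS appender. In log4j 1.x, an event is handed to the appenders of its logger and then to those of each ancestor up to the root, unless a category on the way has its additivity set to false. The Java sketch below reproduces that walk on a java.util.Properties object, so a configuration can be checked before restarting the Index Agent. The class name is hypothetical, and it only understands the log4j.category.*, log4j.additivity.* and log4j.rootCategory keys used above (not log4j.logger.* or log4j.rootLogger). Appenders are returned as a list rather than a set because log4j really does call an appender twice when it is attached at two levels, which is exactly what the additivity=false line avoids for com.emc.documentum.core.fulltext.

```java
import java.io.IOException;
import java.io.Reader;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

final class AppenderResolver {
    private final Properties props;

    AppenderResolver(Properties props) {
        this.props = props;
    }

    // A category value looks like "LEVEL, APPENDER1, APPENDER2": keep only the appender names
    private List<String> declaredAppenders(String key) {
        List<String> names = new ArrayList<>();
        String value = props.getProperty(key);
        if (value == null) {
            return names;
        }
        String[] parts = value.split(",");
        for (int i = 1; i < parts.length; i++) { // parts[0] is the level
            String name = parts[i].trim();
            if (!name.isEmpty()) {
                names.add(name);
            }
        }
        return names;
    }

    // Walks from the logger name up to the root as log4j 1.x does, collecting appenders
    // and stopping after the first category whose additivity is false
    List<String> effectiveAppenders(String loggerName) {
        List<String> result = new ArrayList<>();
        String name = loggerName;
        while (name != null) {
            result.addAll(declaredAppenders("log4j.category." + name));
            String additivity = props.getProperty("log4j.additivity." + name, "true").trim();
            if (additivity.equalsIgnoreCase("false")) {
                return result;
            }
            int dot = name.lastIndexOf('.');
            name = dot > 0 ? name.substring(0, dot) : null;
        }
        result.addAll(declaredAppenders("log4j.rootCategory"));
        return result;
    }

    // Usage: java AppenderResolver log4j.properties com.emc.documentum.core.fulltext.Foo ...
    public static void main(String[] args) throws IOException {
        Properties props = new Properties();
        try (Reader reader = Files.newBufferedReader(Paths.get(args[0]))) {
            props.load(reader);
        }
        AppenderResolver resolver = new AppenderResolver(props);
        for (int i = 1; i < args.length; i++) {
            System.out.println(args[i] + " -> " + resolver.effectiveAppenders(args[i]));
        }
    }
}
```

With the configuration above, a logger under com.emc.documentum.core.fulltext resolves to [F1, LFS] once; removing the additivity line would make it resolve to [F1, LFS, F1, LFS], i.e. every event would be written and forwarded twice.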

Here is how the logs will appear in the logfaces client interface:

In order to instrument the CPS, xDB and DSearch components:

- copy the lfsappenders-X.Y.Z.jar file to the DctmServer_PrimaryDsearch\deploy\dsearch.war\WEB-INF\lib folder

- modify the logback.xml configuration file located in the DctmServer_PrimaryDsearch\deploy\dsearch.war\WEB-INF\classes folder as follows:

...

<appender name="LFS-DSEARCH" class="com.moonlit.logfaces.appenders.logback.LogfacesAppender">
  <remoteHost>your_logfaces_server_name</remoteHost>
  <port>55200</port>
  <locationInfo>true</locationInfo>
  <application>TST-xPlore-DSEARCH</application>
  <reconnectionDelay>1000</reconnectionDelay>
  <offerTimeout>0</offerTimeout>
  <queueSize>1000</queueSize>
  <appender-ref ref="DSEARCH" />
  <delegateMarker>true</delegateMarker>
</appender>

<appender name="LFS-CPS" class="com.moonlit.logfaces.appenders.logback.LogfacesAppender">
  <remoteHost>your_logfaces_server_name</remoteHost>
  <port>55200</port>
  <locationInfo>true</locationInfo>
  <application>TST-xPlore-CPS</application>
  <reconnectionDelay>1000</reconnectionDelay>
  <offerTimeout>0</offerTimeout>
  <queueSize>1000</queueSize>
  <appender-ref ref="CPS" />
  <delegateMarker>true</delegateMarker>
</appender>

<appender name="LFS-XDB" class="com.moonlit.logfaces.appenders.logback.LogfacesAppender">
  <remoteHost>your_logfaces_server_name</remoteHost>
  <port>55200</port>
  <locationInfo>true</locationInfo>
  <application>TST-xPlore-XDB</application>
  <reconnectionDelay>1000</reconnectionDelay>
  <offerTimeout>0</offerTimeout>
  <queueSize>1000</queueSize>
  <appender-ref ref="XDB" />
  <delegateMarker>true</delegateMarker>
</appender>

<root level="WARN">
  <appender-ref ref="DSEARCH" />
  <appender-ref ref="LFS-DSEARCH" />
</root>

<logger name="com.emc" additivity="false">
  <appender-ref ref="DSEARCH"/>
  <appender-ref ref="LFS-DSEARCH" />
</logger>

<logger name="com.emc.cma.cps" additivity="false">
  <appender-ref ref="CPS"/>
  <appender-ref ref="LFS-CPS" />
</logger>

<logger name="com.emc.cma.cps.util.ProcessOutputStreamReader" additivity="false">
  <appender-ref ref="CPS_DAEMON"/>
</logger>

<logger name="com.xhive" additivity="false">
  <appender-ref ref="XDB"/>
  <appender-ref ref="LFS-XDB" />
</logger>

</configuration>
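Both the log4j AsyncSocketAppender and the logback LogfacesAppender take queueSize and offerTimeout parameters. My understanding, to be checked against the logfaces appenders documentation, is that events are put into a bounded in-memory queue drained by a background sender thread, and that offerTimeout is how long the logging thread may wait when that queue is full; with 0, the application never waits on logging. The Java sketch below shows that generic pattern with ArrayBlockingQueue. It is not the logfaces implementation, the class name is hypothetical, and its overflow list merely stands in for something like the log4j backupFile option used for the Index Agent.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.TimeUnit;

final class BoundedEventBuffer {
    private final BlockingQueue<String> queue;
    private final long offerTimeoutMs;
    // Stand-in for a backup file: events that could not be queued in time
    private final ConcurrentLinkedQueue<String> overflow = new ConcurrentLinkedQueue<>();

    BoundedEventBuffer(int queueSize, long offerTimeoutMs) {
        this.queue = new ArrayBlockingQueue<>(queueSize);
        this.offerTimeoutMs = offerTimeoutMs;
    }

    // Called on the application's logging thread: waits at most offerTimeoutMs when the
    // queue is full (0 = do not wait at all) and diverts the event instead of blocking
    boolean append(String event) throws InterruptedException {
        boolean queued = queue.offer(event, offerTimeoutMs, TimeUnit.MILLISECONDS);
        if (!queued) {
            overflow.add(event);
        }
        return queued;
    }

    // Called by the background sender thread: takes everything queued so far
    List<String> drain() {
        List<String> batch = new ArrayList<>();
        queue.drainTo(batch);
        return batch;
    }

    List<String> overflow() {
        return new ArrayList<>(overflow);
    }

    public static void main(String[] args) throws InterruptedException {
        BoundedEventBuffer buffer = new BoundedEventBuffer(2, 0); // tiny queue, offerTimeout=0
        for (String event : new String[] {"e1", "e2", "e3"}) {
            System.out.println(event + " queued: " + buffer.append(event));
        }
        System.out.println("sent: " + buffer.drain() + ", diverted: " + buffer.overflow());
    }
}
```

With a two-slot queue and a zero timeout, e3 is diverted immediately instead of blocking the caller. This trade-off (never slow down the application, possibly lose or defer events) is what makes this kind of appender acceptable on production JBoss instances; a larger queueSize, such as the 1000 used for xPlore above, simply makes diversion less likely during bursts.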

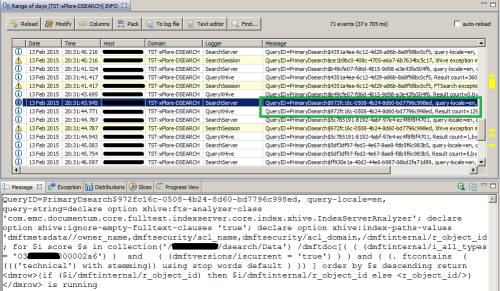

And here, for example, is how the DSearch logs look in the logfaces client interface:

Content Transformation Services (CTS) instrumentation

In order to instrument CTS:

- Copy the lfsappenders-X.Y.Z.jar file to the \lib folder

- Modify the command of the CTS service by adding the lfsappenders-X.Y.Z.jar library to the -Djava.class.path option in the HKEY_LOCAL_MACHINE\SYSTEM\ControlSet001\Services\DocumentumCTS\Parameters\AppParameters registry value (see below)

- Modify the DFC_HOME\config\log4j.properties file to add a new appender

...
log4j.category.com.documentum.cts.impl=INFO, CTSServicesAppender, LFS
log4j.category.com.documentum.cts.services=INFO, CTSServicesAppender, LFS
log4j.category.com.documentum.cts.util=INFO, CTSServicesAppender, LFS
log4j.category.com.documentum.cts.util.CTSInstanceInfoUtils=INFO, CTSInstanceInfoAppender, LFS
...
#LogFaces
log4j.appender.LFS=com.moonlit.logfaces.appenders.AsyncSocketAppender
log4j.appender.LFS.application = TST-CTS
log4j.appender.LFS.remoteHost = <logfaces_server>
log4j.appender.LFS.port = 55200
log4j.appender.LFS.locationInfo = true
log4j.appender.LFS.threshold = ALL
log4j.appender.LFS.reconnectionDelay = 5000
log4j.appender.LFS.offerTimeout = 0
log4j.appender.LFS.queueSize = 100
log4j.appender.LFS.backupFile = D\:/Temp/lfs-jobmanager-backup.log
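Editing the AppParameters value by hand is error-prone, so here is a small Java sketch that appends a jar to the -Djava.class.path option of such a string. It assumes, without having checked every CTS version, that AppParameters is a space-separated list of JVM options whose class path entries are separated by semicolons, optionally wrapped in double quotes; the class name and the jar locations in the example are hypothetical. It only computes the new value, which you would then paste into the registry after backing up the old one.

```java
final class ClassPathEditor {
    private static final String OPTION = "-Djava.class.path=";

    // Appends jarPath to the -Djava.class.path value found in a JVM options string;
    // entries are ';'-separated (Windows) and the value may be wrapped in double quotes
    static String addToClassPath(String appParameters, String jarPath) {
        int start = appParameters.indexOf(OPTION);
        if (start < 0) {
            throw new IllegalArgumentException("no " + OPTION + " option found");
        }
        int valueStart = start + OPTION.length();
        boolean quoted = valueStart < appParameters.length()
                && appParameters.charAt(valueStart) == '"';
        int valueEnd;
        if (quoted) {
            valueEnd = appParameters.indexOf('"', valueStart + 1); // closing quote
            if (valueEnd < 0) {
                throw new IllegalArgumentException("unterminated quoted class path");
            }
        } else {
            valueEnd = appParameters.indexOf(' ', valueStart); // start of the next option
            if (valueEnd < 0) {
                valueEnd = appParameters.length();
            }
        }
        String value = appParameters.substring(quoted ? valueStart + 1 : valueStart, valueEnd);
        for (String entry : value.split(";")) {
            if (entry.trim().equalsIgnoreCase(jarPath)) {
                return appParameters; // already present: leave the value unchanged
            }
        }
        String separator = value.isEmpty() ? "" : ";";
        return appParameters.substring(0, valueEnd) + separator + jarPath
                + appParameters.substring(valueEnd);
    }

    public static void main(String[] args) {
        // Hypothetical value and jar location, for illustration only
        String current = "-Xmx1024m -Djava.class.path=C:\\CTS\\lib\\cts.jar -Dfile.encoding=UTF-8";
        System.out.println(addToClassPath(current, "C:\\CTS\\lib\\lfsappenders-X.Y.Z.jar"));
    }
}
```

Making the helper idempotent (it leaves the string unchanged if the jar is already listed) means it can safely be rerun after a CTS patch that may or may not have reset the registry value.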

And here is how the logs will appear in the logfaces client interface:

Note that these CTS logs are not very detailed: for example, they do not include the ID of the rendered document. Setting some specific classes to the DEBUG level may produce more verbose logs.

Using logfaces alerting and reporting capabilities:

Logfaces has two interesting out-of-the-box features:

- real-time SMTP notifications, triggered when logfaces detects events matching certain criteria (example: send an email notification if logfaces receives more than 10 events containing the “ERROR” message within a period of 20 minutes)

- reports, which can be generated from the logs stored in the logfaces database and sent by email
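To illustrate what such an alert rule evaluates (this is not how logfaces implements it), here is a Java sketch of a sliding-window counter for the example rule above: it keeps the timestamps of matching events, evicts those that are 20 minutes old or older, and fires when more than 10 remain. The class name is hypothetical, the sketch assumes events arrive in timestamp order, and it clears its window after firing so that one burst produces one notification, which is a design choice of this sketch only.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.ArrayDeque;
import java.util.Deque;

final class ErrorBurstDetector {
    private final int threshold;
    private final Duration window;
    private final String pattern;
    // Timestamps of recent matching events, oldest first
    private final Deque<Instant> matches = new ArrayDeque<>();

    ErrorBurstDetector(int threshold, Duration window, String pattern) {
        this.threshold = threshold;
        this.window = window;
        this.pattern = pattern;
    }

    // Returns true when this event pushes the number of matching events seen within
    // the window above the threshold; assumes events arrive in timestamp order
    boolean onEvent(Instant timestamp, String message) {
        if (!message.contains(pattern)) {
            return false;
        }
        matches.addLast(timestamp);
        Instant cutoff = timestamp.minus(window);
        // Drop matches that are at least one full window old
        while (!matches.isEmpty() && !matches.peekFirst().isAfter(cutoff)) {
            matches.pollFirst();
        }
        if (matches.size() > threshold) {
            matches.clear(); // one notification per burst
            return true;
        }
        return false;
    }

    public static void main(String[] args) {
        ErrorBurstDetector rule = new ErrorBurstDetector(10, Duration.ofMinutes(20), "ERROR");
        Instant start = Instant.parse("2015-06-01T08:00:00Z");
        for (int i = 0; i < 11; i++) {
            if (rule.onEvent(start.plusSeconds(60L * i), "ERROR sample message")) {
                System.out.println("send notification at minute " + i);
            }
        }
    }
}
```

Running main prints "send notification at minute 10": the eleventh ERROR within 20 minutes is the first one that exceeds the threshold of 10. The same number of errors spread over an hour would never fire, which is the point of combining a count with a time window.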

Conclusion

This is the end of this series of three posts, which I hope will be useful to people who would like to centralize the logs of the different components of a Documentum platform. My primary goal was to present a logging system (and the associated logfaces tool) which I have tested and found really robust, with close to no impact on performance. The combination of the logfaces appenders, the logfaces log server and the MongoDB database has proven to be an excellent solution for Documentum log centralization, for a limited effort. I hope you will give it a try, as it is really worth the time you will invest in studying it.

The proposed solution can certainly be improved, and I invite readers to provide feedback so that it keeps getting better. As an example, I recently had an interesting exchange with Andrey Panfilov, who proposed what could be a better solution in terms of Webtop log verbosity; I will certainly investigate it to see whether its performance makes it a viable alternative to the servlet-filter-based solution I previously presented.


Stephane, could you please explain some points…

1. What are the advantages/disadvantages of Logfaces over its “competitors” (basic googling gave me the following suggestions: Splunk, HP-Arcsight Logger, IBM QRadar Logger, LogLogic, AlienVault, logstash + elasticsearch + kibana, graylog + elasticsearch)?

2. What are you going to do with the logs? Let me share my insights on the question. From my (i.e. a developer’s) perspective, I see only one reason to store log files somewhere for a long period of time: when a customer suspects that recent activities (an upgrade, an update, etc.) caused some trouble in their environment. In this case, I am interested in analyzing old logs to find out whether the disputed errors are caused by the recent activities or not. From an administrator’s perspective I see more reasons, but all of them are questionable:

* security audit – documentum software does not log enough information about security-related incidents

* performance analysis – in most cases it is enough to collect required information during one day to be able to make some conclusion about performance problems

* triggering notifications when some patterns exceed thresholds – this is a good example, but the problem is that you don’t know the “context” of the error (i.e. you don’t know how to reproduce it), so you need to interact somehow with business users

Hello Andrey,

1. I think I gave some explanations on the logfaces choice in this section of Part-1 but also when answering Pierre Huttin’s question about Graylog2.

If I had to summarize, I would say the big advantages of logfaces are:

– its price, or rather its overall cost of ownership. About $1000 for such a tool is just astonishing from my point of view. Commercial solutions are much more expensive (look at the Splunk pricing model, for example). As for open source solutions, I could be wrong, but I personally think that maintaining, administering and configuring such tools requires a significant effort at the very least, and possibly a dedicated team. As I told Pierre, if you have such a team in place, with some experience on a tool like Splunk or ELK, use it.

– its thick client, which is both fast and really easy to use. There is no need to learn any search language (whether you call it “search filters”, “search queries” or “search commands”) to query the collected data. In general, I’m not a big fan of web-based administration tools, especially when it comes to data analysis (but I could be wrong). The best thing I can recommend is to test the GUI yourself. I tried Splunk’s interface and I know how the elasticsearch interface works: there is just no way our developers would use them.

– it just works. It suits my needs in terms of performance, stability and user-friendliness. It may not be the perfect solution, but in my Documentum context it just fits perfectly.

2. I started writing an answer to this one, but after a while I thought it would be interesting to write a real post about it, with real-life examples. I will therefore reply to your question in an independent post as soon as possible.

Stephane


Hello Guys,

Thank you very much for the excellent post. Collecting and monitoring information from Documentum’s logs is a terrifying task; this tool could make administrators’ lives a lot easier.
